March 31st

- jesseemmothmusik

- March 31

- 3 min read

I thought that merging audio buffers would be a piece of cake, given that all the functionality except the actual memory copy of the audio buffers was already in place. While I wouldn’t say that I ran into any big difficulties per se, I did run into a feature that didn’t behave the way I expected.

After some hours(!) of staring at the fancy parts of my code, where I move pointers and write binary data (and where I of course suspected the bug to be hiding), I found a strange line in my code.

I was creating an AudioBuffer with the size that the actual binary buffer should have. In doing so I confused the audio buffer of ints (or actually shorts) used inside the program with the audio data buffer that gets written to an audio file in binary mode.
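To make the mix-up concrete, here is a minimal sketch of keeping the two sizes apart. It assumes 16-bit samples and that the binary buffer is sized in bytes; `Sample`, `byteCountFor`, `sampleCountFor` and `makeSampleBuffer` are hypothetical names, not the editor's real API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical sample type: the post only says the program uses shorts.
using Sample = std::int16_t;

// Bytes needed in the binary data chunk for a given number of samples.
constexpr std::size_t byteCountFor(std::size_t sampleCount) {
    return sampleCount * sizeof(Sample);
}

// Samples contained in a binary data chunk of the given byte size.
constexpr std::size_t sampleCountFor(std::size_t byteCount) {
    return byteCount / sizeof(Sample);
}

// Allocates the in-program buffer from a byte size by converting to a
// sample count first, instead of using the byte size directly.
inline std::vector<Sample> makeSampleBuffer(std::size_t byteCount) {
    assert(byteCount % sizeof(Sample) == 0 && "byte size must hold whole samples");
    return std::vector<Sample>(sampleCountFor(byteCount));
}
```

With 16-bit samples, passing the byte size straight to the sample buffer would allocate twice as many samples as intended, which is the kind of error that hides well away from the pointer code.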

Anyway, the feature is now live in the application and the user can merge two audio regions into a new Wave file.

Time

A big topic in my editor has been the “to be or not to be” of a time axis in the edit area. The end game has always been to include one, but in the context of this specialization project there simply isn’t enough time to implement everything a timeline feature would need.

Returning to the feature of gluing regions together, this means that the visual empty space between two regions does not affect the merged audio buffer in any way; there is no sense of time, the audio regions are just floating around in a void on screen.
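A minimal sketch of what such a merge could look like, assuming each region owns its samples as 16-bit values. `Region`, `screenX` and `mergeRegions` are hypothetical names used only for illustration; the point is that the on-screen position never enters the calculation.

```cpp
#include <cstdint>
#include <vector>

using Sample = std::int16_t;

// Hypothetical region type: an on-screen position plus its samples.
struct Region {
    float screenX;                // where the region is drawn; ignored when merging
    std::vector<Sample> samples;  // the audio data itself
};

// Concatenates the samples of `first` and `second` back to back.
// No silence is inserted for the visual gap between the two regions.
inline std::vector<Sample> mergeRegions(const Region& first, const Region& second) {
    std::vector<Sample> merged;
    merged.reserve(first.samples.size() + second.samples.size());
    merged.insert(merged.end(), first.samples.begin(), first.samples.end());
    merged.insert(merged.end(), second.samples.begin(), second.samples.end());
    return merged;
}
```

With a time axis, the gap would instead have to be converted into a number of silent samples and inserted between the two copies.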

Lanes

I added the ability to move regions between lanes with snapping (the lanes really should be called tracks, but since there is no time yet…) by taking the vertical movement into account. The implementation is very simple: it does not care which lane a region is in, only where the region wants to go. If the delta movement is almost a lane’s height, then it’s time to move the region by exactly a lane’s height.
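The snapping rule can be sketched roughly as follows. The function name `snapToLane` and the 80% threshold standing in for "almost a lane's height" are assumptions; the post does not state the actual value.

```cpp
#include <cmath>

// Returns the vertical offset to apply to a region, given the accumulated
// vertical drag distance `deltaY`. The region either stays put (0) or jumps
// by exactly one lane height in the drag direction.
// `threshold` is an assumed fraction of the lane height.
inline float snapToLane(float deltaY, float laneHeight, float threshold = 0.8f) {
    if (std::fabs(deltaY) >= laneHeight * threshold) {
        // Keep the sign of the drag, but use the exact lane height.
        return std::copysign(laneHeight, deltaY);
    }
    return 0.0f;
}
```

Because the function only looks at the drag delta, it never needs to know which lane the region currently sits in.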

WAVEIO

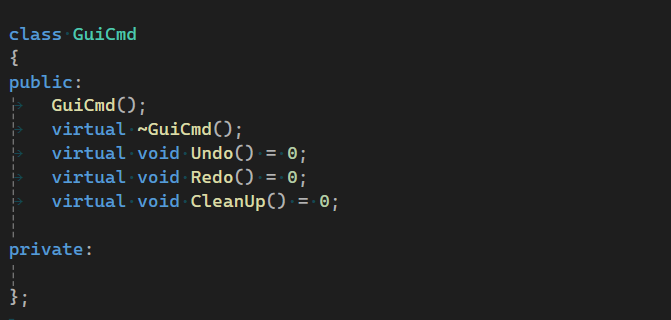

The inevitable did happen at last. My big efforts in keeping the GUI application and all the audio classes from WAVEIO separated proved to be for nothing. In the end I couldn’t protect WAVEIO from the big mouth of the GUI…

Jokes aside, WAVEIO is the name of my main singleton class, through which the audio editor project has to go in order to access audio functionality. This is good as it makes initialization more streamlined; initialize WAVEIO and you will initialize all associated audio classes!
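As an illustration of the pattern only, a singleton that brings up its associated classes in one call might look like the sketch below. `WaveIO`, `Mixer`, `Decoder` and every method name here are hypothetical stand-ins, not the real WAVEIO interface.

```cpp
#include <cassert>

// Hypothetical stand-ins for the audio classes behind the singleton.
struct Mixer   { bool ready = false; void init() { ready = true; } };
struct Decoder { bool ready = false; void init() { ready = true; } };

class WaveIO {
public:
    // Function-local static: constructed once, on first use.
    static WaveIO& instance() {
        static WaveIO io;
        return io;
    }

    // One call initializes every associated audio class.
    void initialize() {
        mixer_.init();
        decoder_.init();
        initialized_ = true;
    }

    bool isInitialized() const { return initialized_; }

    // Access goes through the singleton and requires prior initialization.
    const Mixer& mixer() const {
        assert(initialized_ && "call initialize() first");
        return mixer_;
    }

    WaveIO(const WaveIO&) = delete;
    WaveIO& operator=(const WaveIO&) = delete;

private:
    WaveIO() = default;

    Mixer mixer_;
    Decoder decoder_;
    bool initialized_ = false;
};
```

The GUI side would then only ever call `WaveIO::instance().initialize()` once at startup and reach everything else through that instance.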

Wrapping up

This is the last week of the project and I’m therefore very hesitant to add more features to my program. One thing I have yet to do is to try using my own .wio files in a game environment. That is, using the WAVEIO library in my regular game engine and having total control over the process of handling audio assets.

There are a lot of things I’d like to do with this. I mean, having the audio editor run from inside my engine would mean that a user can edit .wio files on one screen and try them out in-game a second later on another screen.

I could also use the editor to create variations of an sfx and take advantage of the “one buffer, multiple regions” structure I’m using to create round-robin playback for common sound effects in a game.
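A rough sketch of the round-robin idea, assuming each variation is simply an offset and a length into one shared sample buffer. `RegionView` and `RoundRobin` are hypothetical names; how .wio files actually store regions is not shown in this post.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical view into a shared sample buffer: no audio data is copied.
struct RegionView {
    std::size_t offset;  // index of the first sample in the shared buffer
    std::size_t length;  // number of samples in this variation
};

// Cycles through the variations in order, wrapping around at the end.
class RoundRobin {
public:
    explicit RoundRobin(std::vector<RegionView> variations)
        : variations_(std::move(variations)) {
        assert(!variations_.empty() && "need at least one variation");
    }

    // Returns the variation to play now and advances to the next one.
    const RegionView& next() {
        const RegionView& current = variations_[index_];
        index_ = (index_ + 1) % variations_.size();
        return current;
    }

private:
    std::vector<RegionView> variations_;
    std::size_t index_ = 0;
};
```

Each time a footstep or gunshot triggers, the game would call `next()` and play that slice of the shared buffer, so repeated sounds do not come out identical.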

Anyway, first I’m going to do some serious testing of my library before trying it out in the game engine. Hopefully I’ll be finished by Friday!