February 21st

- jesseemmothmusik

- March 10

- 2 min read

Updated: March 28

So, the journey has started. I’m diving (head first) into audio programming during the specialization course at The Game Assembly in Malmö.

I am going to document my progress here as often as possible during these seven weeks. It will be a great way to summarize for myself what I’ve actually achieved and to process the problems I encounter, as well as, I hope, a good way to share my experience with the verse.

What I Have

I'm using my own game engine as a starting point for development. The engine has a basic component system, a working rendering pipeline and asset handling. I probably won't use much of this for the moment, but it will be great to keep it around in case I need any of the functionality.

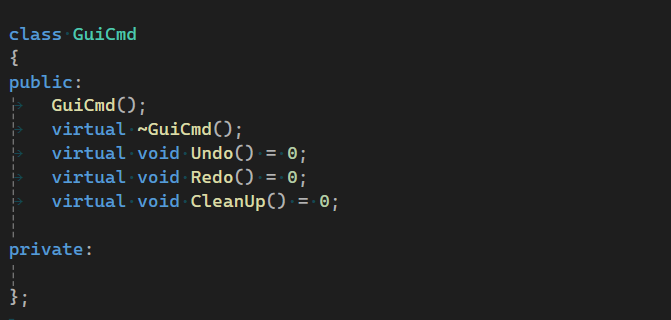

I will basically create two new modules in my Visual Studio projects: one for the library and one for the GUI application. I will also have to add functionality to my existing graphics engine for rendering in a strictly 2D mode (for visualizing waveforms, fades, etc.).

First Thoughts

This first week has been mostly about planning out the whole project and “staring at a piece of paper” to get my thoughts straight about the code design and the purpose.

I found a really good example of when I needed a special tool during the production of our previous game, Spite Catharsis. I was synchronizing SFX to VFX and decided to scrap all code-based timing systems (with delays and stuff) and instead render the audio files with the delay inside them. This proved to be a very tedious and inefficient strategy, and I really felt I needed some kind of editor that could be tied to the game engine and write synchronization data.
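To make the idea a bit more concrete, here is a minimal C++ sketch of what keeping the delay as data (instead of rendering silence into the audio file) could look like. Everything in it is an assumption for illustration: the names `SfxSyncEvent`, `DelayToFrames` and `MixDelayed` are hypothetical and not taken from the engine, and the audio is assumed to be mono floating-point samples.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sync record an editor could write out per sound effect.
struct SfxSyncEvent {
    float delaySeconds; // how long after the VFX starts the sound should begin
};

// Convert a delay in seconds to a whole number of sample frames.
// Negative delays are clamped to zero.
std::size_t DelayToFrames(float delaySeconds, int sampleRate) {
    if (delaySeconds <= 0.0f) {
        return 0;
    }
    const double frames = static_cast<double>(delaySeconds) * sampleRate;
    return static_cast<std::size_t>(std::llround(frames));
}

// Mix a mono clip into a mono output buffer, starting at the event's offset.
// Samples that would land past the end of the output are dropped.
void MixDelayed(const std::vector<float>& clip, const SfxSyncEvent& event,
                int sampleRate, std::vector<float>& out) {
    const std::size_t start = DelayToFrames(event.delaySeconds, sampleRate);
    for (std::size_t i = 0; i < clip.size() && start + i < out.size(); ++i) {
        out[start + i] += clip[i];
    }
}
```

With something along these lines, changing the timing means editing one number in a data file rather than re-rendering the audio, which is the kind of workflow a synchronization editor could build on.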

So this is basically what I’m going to make. Or at least begin creating, as the finished product won’t fit into this seven-week project. I will at least dive into basic handling of raw audio and basic DSP functions. Can’t wait!