March 4th

- jesseemmothmusik

- March 10


After a generous number of hours spent reading and writing audio data (just .wav files for now), I am finally entering the land of fun stuff.

Yesterday I made a simple wrapper for XAudio2, which I'll use as the playback system in the application. I want to keep my own audio structures so I don't tie myself to a specific library. I use a struct to hold the .wav-specific format information and keep the audio data elsewhere, as a buffer of ints. XAudio2 works much the same way (a format description plus a separate data buffer), which makes converting between the two easy.
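A minimal sketch of that split between format information and sample data, assuming interleaved 16-bit samples. The names `WaveFormat` and `AudioBuffer` are hypothetical, not the ones used in the project. The fields are chosen to mirror XAudio2's `WAVEFORMATEX` (format tag, channel count, sample rate, average bytes per second, block align, bits per sample), so converting to it would be a field-by-field copy.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical format description owned by the app, not by XAudio2.
// Fields mirror WAVEFORMATEX so conversion is a field-by-field copy.
struct WaveFormat {
    uint16_t formatTag = 1;       // 1 = WAVE_FORMAT_PCM
    uint16_t channels = 2;        // 2 = stereo
    uint32_t sampleRate = 44100;  // sample frames per second
    uint16_t bitsPerSample = 16;  // the only depth supported for now

    // Bytes per sample frame (one sample for each channel).
    uint16_t blockAlign() const {
        return static_cast<uint16_t>(channels * (bitsPerSample / 8));
    }
    // Bytes of audio data consumed per second of playback.
    uint32_t avgBytesPerSec() const { return sampleRate * blockAlign(); }
};

// Sample data kept apart from the format: interleaved 16-bit samples,
// i.e. L R L R ... for stereo.
struct AudioBuffer {
    WaveFormat format;
    std::vector<int16_t> samples;

    // Number of sample frames (samples per channel).
    std::size_t frameCount() const {
        assert(format.channels > 0);
        return samples.size() / format.channels;
    }
    // Raw size in bytes, e.g. the data length handed to a playback API.
    std::size_t byteSize() const { return samples.size() * sizeof(int16_t); }
};
```

Keeping `blockAlign` and `avgBytesPerSec` as derived values rather than stored fields means they can never disagree with the channel count or sample rate.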

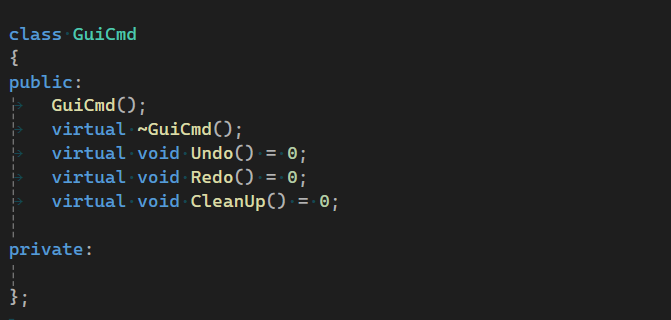

I've split my project into classes, each with a specific task. The WaveHandler is my file reader and writer, the WavePlayer is my XAudio2 wrapper for playing back audio, and the DSPEngine will handle all modifications to the audio data. The main application project, AudioEditor, handles the UI and interaction with the user.
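One way to keep XAudio2 from leaking into the rest of the project is to let the other parts depend only on small interfaces. The sketch below assumes that design: the abstract base classes, the `AudioData` type and the `openAndPlay` function are hypothetical illustrations, not the project's actual code. DSPEngine is left out because it only needs to operate on `AudioData` and has no library to hide.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical app-owned audio type that every component passes around.
struct AudioData {
    uint16_t channels = 2;
    uint32_t sampleRate = 44100;
    std::vector<int16_t> samples;  // interleaved 16-bit PCM
};

// WaveHandler: the only component that knows the .wav file layout.
class WaveHandler {
public:
    virtual ~WaveHandler() = default;
    virtual bool read(const std::string& path, AudioData& out) = 0;
    virtual bool write(const std::string& path, const AudioData& in) = 0;
};

// WavePlayer: the only component that talks to the playback library.
// An XAudio2-backed subclass would implement this, so XAudio2 headers
// stay out of DSPEngine and AudioEditor.
class WavePlayer {
public:
    virtual ~WavePlayer() = default;
    virtual bool play(const AudioData& data) = 0;
    virtual void stop() = 0;
};

// AudioEditor-side glue: depends only on the interfaces above.
bool openAndPlay(WaveHandler& io, WavePlayer& player, const std::string& path) {
    AudioData data;
    if (!io.read(path, data)) return false;
    return player.play(data);
}
```

Because `openAndPlay` only sees the interfaces, it can be exercised with fake implementations, and replacing XAudio2 with another library would mean writing one new `WavePlayer` subclass.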

One restriction I've already decided on is to support only 16-bit PCM audio, read from and written to .wav files. Upgrading the WaveHandler so the user can choose a different format is of course a nice-to-have.
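With the 16-bit PCM restriction, writing a file comes down to emitting the canonical 44-byte RIFF header followed by the samples in little-endian order. Below is a minimal in-memory sketch, assuming interleaved `int16_t` samples; `encodeWav16` and its helpers are hypothetical names. Reading is roughly the reverse, but a robust reader should walk the chunk list rather than assume a fixed 44-byte header, since real files can contain extra chunks (such as `LIST`) before `data`.

```cpp
#include <cstdint>
#include <vector>

namespace {
// Append an unsigned value in little-endian byte order (WAV is little-endian).
void putLE(std::vector<uint8_t>& out, uint32_t value, int bytes) {
    for (int i = 0; i < bytes; ++i)
        out.push_back(static_cast<uint8_t>((value >> (8 * i)) & 0xFF));
}
// Append a four-character chunk ID such as "RIFF" or "data".
void putTag(std::vector<uint8_t>& out, const char* tag) {
    out.insert(out.end(), tag, tag + 4);
}
}  // namespace

// Encode interleaved 16-bit PCM samples as a complete .wav file in memory:
// RIFF header, "fmt " chunk, then "data" chunk.
std::vector<uint8_t> encodeWav16(const std::vector<int16_t>& samples,
                                 uint16_t channels, uint32_t sampleRate) {
    const uint16_t bitsPerSample = 16;
    const uint16_t blockAlign = static_cast<uint16_t>(channels * (bitsPerSample / 8));
    const uint32_t byteRate = sampleRate * blockAlign;
    const uint32_t dataSize = static_cast<uint32_t>(samples.size() * 2);

    std::vector<uint8_t> out;
    out.reserve(44 + dataSize);
    putTag(out, "RIFF");
    putLE(out, 36 + dataSize, 4);  // size of everything after this field
    putTag(out, "WAVE");

    putTag(out, "fmt ");
    putLE(out, 16, 4);             // fmt chunk size for plain PCM
    putLE(out, 1, 2);              // audio format 1 = PCM
    putLE(out, channels, 2);
    putLE(out, sampleRate, 4);
    putLE(out, byteRate, 4);
    putLE(out, blockAlign, 2);
    putLE(out, bitsPerSample, 2);

    putTag(out, "data");
    putLE(out, dataSize, 4);
    for (int16_t s : samples)      // two's-complement bits, low byte first
        putLE(out, static_cast<uint16_t>(s), 2);
    return out;
}
```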

Right now the DSPEngine can handle gain changes and fade-in/fade-out. I also want to be able to split and merge audio regions. This will introduce the notion of regions into my project and raises some design questions I don't have answers to yet: what exactly is a region, and how does it relate to a .wav file or to a buffer of audio data? To be continued!
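A sketch of what gain changes and linear fades can look like on 16-bit samples, assuming the same interleaved layout. The function names are hypothetical, and specifying gain in decibels is an assumption (the post doesn't say how gain is given). Results are clamped to the 16-bit range instead of being allowed to wrap around.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert a gain in decibels to a linear amplitude factor (+20 dB = x10).
double dbToLinear(double db) { return std::pow(10.0, db / 20.0); }

// Scale one sample, rounding to nearest and clamping to the 16-bit range
// so loud results saturate instead of wrapping around.
int16_t scaleSample(int16_t s, double factor) {
    double v = std::round(s * factor);
    v = std::max(-32768.0, std::min(32767.0, v));
    return static_cast<int16_t>(v);
}

// Apply a constant gain (in dB) to every sample.
void applyGain(std::vector<int16_t>& samples, double db) {
    const double factor = dbToLinear(db);
    for (auto& s : samples) s = scaleSample(s, factor);
}

// Linear fade-in over the first `frames` sample frames, starting from silence.
// Every channel in a frame gets the same factor so the channels stay in step.
void fadeIn(std::vector<int16_t>& samples, std::size_t channels, std::size_t frames) {
    assert(channels > 0);
    frames = std::min(frames, samples.size() / channels);
    for (std::size_t f = 0; f < frames; ++f) {
        const double factor = static_cast<double>(f) / frames;
        for (std::size_t c = 0; c < channels; ++c) {
            int16_t& s = samples[f * channels + c];
            s = scaleSample(s, factor);
        }
    }
}

// Linear fade-out over the last `frames` sample frames, ending in silence.
void fadeOut(std::vector<int16_t>& samples, std::size_t channels, std::size_t frames) {
    assert(channels > 0);
    const std::size_t total = samples.size() / channels;
    frames = std::min(frames, total);
    const std::size_t start = total - frames;
    for (std::size_t f = 0; f < frames; ++f) {
        const double factor = static_cast<double>(frames - 1 - f) / frames;
        for (std::size_t c = 0; c < channels; ++c) {
            int16_t& s = samples[(start + f) * channels + c];
            s = scaleSample(s, factor);
        }
    }
}
```

Linear ramps are the simplest choice; other curve shapes (for example equal-power) are common alternatives and would only change how `factor` is computed.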